A Safer Way to Deploy Healthcare AI: Using the Confusion Matrix to Manage Risk

Healthcare AI will make mistakes. The executive challenge is not avoiding every error but deciding which errors matter, how severe they are, and how they will be caught before they cause harm. In a HealthAI Collective lightning talk, Daniel Pupius explains how healthcare leaders can use confusion-matrix thinking as a practical risk and governance framework to deploy AI safely, redesign workflows, and unlock operational ROI without compromising reliability or compliance.

Healthcare leaders are right to approach AI deployment with caution. Modern AI systems are probabilistic, not deterministic, and mistakes in clinical or operational workflows can lead to delayed care, compliance exposure, or patient harm.

Daniel Pupius, Fractional CTO at Float Health, described a familiar pattern. AI prototypes were easy to build and compelling. Shipping them into production took months. The blocker was not technology. It was stakeholder fear driven by unclear risk.

Executives were asking reasonable questions:

Without a shared framework, these questions stall progress.

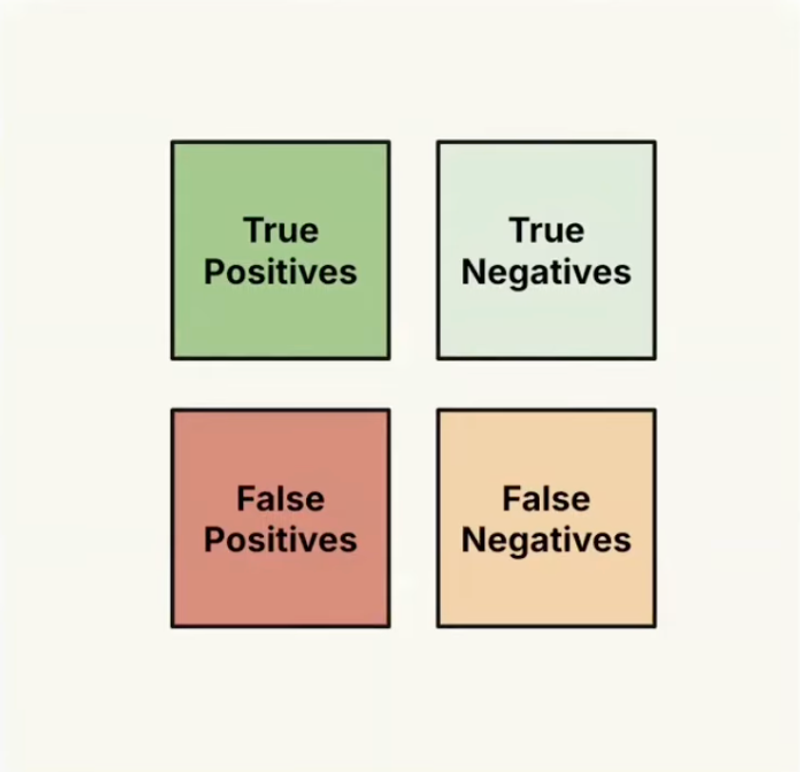

Traditionally, data scientists use the confusion matrix (a two-by-two grid counting true positives, false positives, false negatives, and true negatives) to evaluate classification models. Pupius repurposed it as a tool for executive alignment and risk management.
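For readers who have not met it in its data-science role, a confusion matrix simply tallies how a classifier's predictions line up with reality. The sketch below assumes a hypothetical yes/no task ("is this referral safe to process automatically?") with made-up labels; it illustrates the idea and is not Float Health's code.

```python
from collections import Counter


def confusion_matrix(actual: list[bool], predicted: list[bool]) -> dict[str, int]:
    """Count the four outcomes of a binary classifier.

    True means "positive" (hypothetically: the referral is safe to process
    automatically); False means "negative" (it should go to a human).
    """
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must be the same length")
    counts = Counter()
    for a, p in zip(actual, predicted):
        if p and a:
            counts["TP"] += 1  # automated, and automation was correct
        elif p and not a:
            counts["FP"] += 1  # automated, but should have gone to a human
        elif not p and a:
            counts["FN"] += 1  # sent to a human unnecessarily
        else:
            counts["TN"] += 1  # correctly sent to a human
    # Report all four quadrants, including any that are zero.
    return {k: counts[k] for k in ("TP", "FP", "FN", "TN")}


actual = [True, True, False, True, False, False]
predicted = [True, False, True, True, False, False]
print(confusion_matrix(actual, predicted))
# {'TP': 2, 'FP': 1, 'FN': 1, 'TN': 2}
```

The counts are the raw material; the executive framing below is about what each of those four numbers costs.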

Instead of reporting abstract accuracy metrics, he mapped each quadrant to a real operational outcome.

The executive shift is simple but powerful. Not all errors are equal. Each quadrant carries different clinical, financial, and operational risk.

By explicitly defining severity, likelihood, and downstream mitigations for each outcome, teams move from vague fear to concrete decision-making.
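One way to make severity, likelihood, and mitigation concrete is a small risk register keyed by quadrant. The 1-to-5 scales, the example wording, the scores, and the flagging rule below are hypothetical assumptions for illustration, not the scoring Float Health used.

```python
from dataclasses import dataclass


@dataclass
class QuadrantRisk:
    quadrant: str    # "TP", "FP", "FN", or "TN"
    outcome: str     # what this quadrant means operationally
    severity: int    # hypothetical scale: 1 (minor) to 5 (patient harm)
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (frequent)
    mitigation: str  # downstream control; empty if none is defined yet

    @property
    def score(self) -> int:
        # Classic risk-matrix scoring: severity multiplied by likelihood.
        return self.severity * self.likelihood


def unmitigated_risks(register: list[QuadrantRisk], threshold: int) -> list[str]:
    """Return quadrants whose score meets the threshold but lack a mitigation."""
    return [r.quadrant for r in register if r.score >= threshold and not r.mitigation]


register = [
    QuadrantRisk("TP", "Referral processed correctly", 1, 5, ""),
    QuadrantRisk("FP", "Bad data accepted as correct", 5, 2, ""),
    QuadrantRisk("FN", "Good referral sent to a human", 2, 3, "Async review queue"),
    QuadrantRisk("TN", "Problem referral sent to a human", 1, 3, "Async review queue"),
]

print(unmitigated_risks(register, threshold=8))
# ['FP']
```

In this sketch the false-positive quadrant is flagged because it is both severe and has no mitigation yet; a team could hold deployment sign-off until the list comes back empty.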

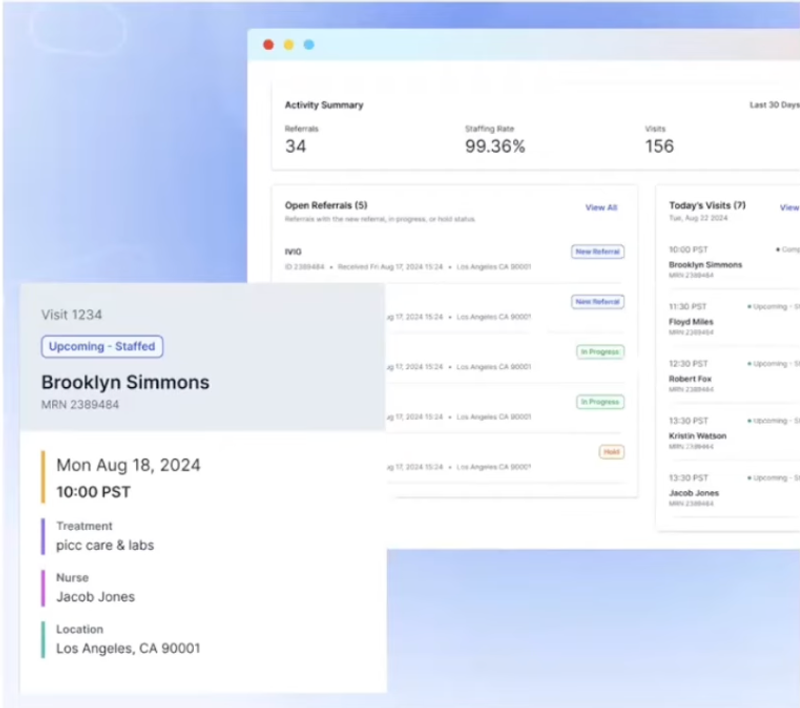

At Float Health, referrals arrived by email, phone, and fax, a consequence of legacy systems and the difficulty of driving behavior change. Many were incomplete, and interpreting them relied on tacit human knowledge.

Errors cascaded quickly. Incorrect data could mean a referral staffed with the wrong nurse, delayed infusions, and potential patient harm.

The opportunity for healthcare automation was obvious. The risk of deploying AI without guardrails was unacceptable.

The confusion matrix made the trade-offs visible.

This clarity allowed leadership to approve deployment with confidence.

The most meaningful gains did not come from model sophistication. They came from workflow redesign.

By automating the happy path, Float Health reduced referral staffing time from an average of 20 minutes to roughly 10 seconds. More importantly, operators no longer needed to monitor inboxes in real time.

Work shifted from reactive, synchronous firefighting to asynchronous queues. Staffing utilization improved. Human expertise was reserved for edge cases where judgment mattered.

This is the core lesson for healthcare executives. AI delivers ROI when it reshapes workflows, not when it chases perfection.
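The happy-path split can be sketched as a router: referrals that pass every check are processed automatically, and everything else lands in a queue that operators work through asynchronously instead of watching an inbox. The `Referral` fields, the `Router` class, and the completeness check are hypothetical; the talk does not describe the actual referral schema.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Referral:
    # Hypothetical fields; a real referral carries far more data.
    referral_id: str
    patient_name: str = ""
    medication: str = ""
    prescriber: str = ""


REQUIRED_FIELDS = ("patient_name", "medication", "prescriber")


@dataclass
class Router:
    auto_processed: list[str] = field(default_factory=list)
    review_queue: deque = field(default_factory=deque)

    def route(self, referral: Referral) -> str:
        missing = [f for f in REQUIRED_FIELDS if not getattr(referral, f)]
        if not missing:
            # Happy path: everything present, no human needed right now.
            self.auto_processed.append(referral.referral_id)
            return "auto"
        # Edge case: park it, with the reason, for an operator to handle later.
        self.review_queue.append((referral.referral_id, missing))
        return "queued"


router = Router()
router.route(Referral("r1", "A. Patient", "IVIG", "Dr. A"))
router.route(Referral("r2", "B. Patient", "", "Dr. B"))
print(router.auto_processed)      # ['r1']
print(list(router.review_queue))  # [('r2', ['medication'])]
```

Recording why an item was queued lets the operator start from the missing piece rather than re-reading the whole referral.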

False positives are dangerous because downstream systems treat them as correct and act on them. Pupius addressed this by aggressively constraining the use case.
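The talk does not spell out exactly how the use case was constrained. One common technique, offered here as an assumption rather than Float Health's method, is to auto-accept only outputs whose confidence clears a threshold chosen on labeled validation data, so that the share of wrong outputs slipping through stays within an error budget. The function name, scores, and labels are hypothetical.

```python
from typing import Optional


def pick_threshold(scores: list[float], correct: list[bool],
                   max_error_rate: float) -> Optional[float]:
    """Return the lowest threshold whose auto-accepted set meets the error budget.

    scores: model confidence for each validation example.
    correct: whether the model's output for that example was actually right.
    Lower thresholds automate more work, so the lowest qualifying one is
    preferred. Returns None if no threshold qualifies.
    """
    best = None
    for t in sorted(set(scores), reverse=True):
        accepted = [c for s, c in zip(scores, correct) if s >= t]
        errors = accepted.count(False)  # false positives: accepted but wrong
        if errors / len(accepted) <= max_error_rate:
            best = t  # iterating high to low, so this ends as the lowest qualifier
    return best


scores = [0.99, 0.97, 0.95, 0.90, 0.80, 0.60]
correct = [True, True, True, False, True, False]
print(pick_threshold(scores, correct, max_error_rate=0.0))  # 0.95
```

With a zero error budget, only the three most confident examples stay on the automated path and the rest go to people. A real validation set would need to be far larger for the estimate to be trustworthy.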

Instead of auditing every automated decision, the system focused attention only where risk was highest.

This approach mirrors how high-performing clinical teams already operate.
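The talk gives the principle, not the scoring rule. The sketch below assumes a simple risk proxy, severity multiplied by (1 minus confidence), and a fixed daily reviewer capacity; both are hypothetical choices used to show how review effort can be concentrated on the riskiest automated decisions.

```python
import heapq


def select_for_review(decisions: list[dict], capacity: int) -> list[str]:
    """Pick the highest-risk automated decisions for human review.

    Each decision is a dict with hypothetical keys:
      "id": identifier
      "confidence": model confidence in [0, 1]
      "severity": how bad an error would be (1 = minor, 5 = patient harm)
    A confident, low-stakes decision scores near zero; an uncertain,
    high-stakes one scores highest.
    """
    def risk(d: dict) -> float:
        return d["severity"] * (1.0 - d["confidence"])

    # nlargest returns the top `capacity` items, highest risk first.
    top = heapq.nlargest(capacity, decisions, key=risk)
    return [d["id"] for d in top]


decisions = [
    {"id": "a", "confidence": 0.99, "severity": 5},  # risk about 0.05
    {"id": "b", "confidence": 0.70, "severity": 5},  # risk about 1.5
    {"id": "c", "confidence": 0.60, "severity": 1},  # risk about 0.4
    {"id": "d", "confidence": 0.95, "severity": 4},  # risk about 0.2
]
print(select_for_review(decisions, capacity=2))  # ['b', 'c']
```

Note that decision "a" is high-severity but skipped: the model is confident enough that spending scarce reviewer time on it buys little.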

A recurring misconception is that AI must outperform humans in all cases to be valuable. Pupius challenged this directly.

Humans make mistakes more often than most technologists expect. Healthcare already manages this reality through quality control, escalation, and redundancy.
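Why redundancy works can be shown with simple arithmetic: an error only reaches the patient if every check downstream misses it. The sketch below assumes the checks fail independently, which real checks rarely do perfectly, so it gives an optimistic estimate. The function name and all rates are hypothetical, not figures from the talk.

```python
def residual_error_rate(first_pass_error: float, catch_rates: list[float]) -> float:
    """Error rate left after a first pass plus a series of independent checks.

    first_pass_error: probability the first pass (AI or human) is wrong.
    catch_rates: probability each downstream check catches an error that
    reaches it. Independence between checks is assumed.
    """
    rate = first_pass_error
    for c in catch_rates:
        rate *= 1.0 - c  # only errors the check misses flow downstream
    return rate


# Hypothetical: a human first pass with one QC step, versus an AI that is
# wrong more often on its own but is followed by a stronger review step.
print(round(residual_error_rate(0.05, [0.8]), 4))  # 0.01
print(round(residual_error_rate(0.08, [0.9]), 4))  # 0.008
```

Under these made-up numbers the AI-plus-review pipeline ends up safer than the human-only one even though the AI alone is less accurate, which is the point: the system does not need to beat humans at every step.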

The winning model combines AI with human judgment.

This framing increases clinician trust and accelerates adoption.

Daniel Pupius is a Fractional CTO at Float Health and Product Development Advisor at Product Health, helping startups scale through strong engineering, strategy, and organizational design. A former Staff Software Engineer at Google and Co-founder and CEO of Range, Daniel brings deep expertise in product architecture and leadership. He holds a BSc in Artificial Intelligence from the University of Manchester and an MA in Industrial Design from Sheffield Hallam University.

Confusion Matrix for Safer Health AI