Healthcare AI Data Handling Under HIPAA: How Executives Reduce PHI Risk Without Slowing Workflow

As healthcare organizations accelerate AI adoption, HIPAA compliance has shifted from a legal checklist to an operational design challenge. Executives are no longer asking whether AI can be used with PHI, but how to architect data flows that reduce risk without slowing care delivery. In a HealthAI Collective lightning talk, Adam El Gerbi offers a pragmatic view of HIPAA and AI data handling, focusing on how leaders can design workflows, access controls, and system boundaries that protect PHI while still enabling scalable, real-world AI use.

When AI is introduced into healthcare operations, HIPAA becomes a practical constraint on how everyday workflows are designed. Organizations that support physicians and handle patient information encounter compliance questions during routine tasks, not only during formal reviews.

Handling patient demographics, running eligibility checks, or evaluating data often requires moving sensitive information between systems. The risk does not come from intent but from how these routine data exchanges are structured.

The key insight is not that organizations need exhaustive compliance programs. It is that leaders must understand where PHI is actually touched in daily operations and apply reasonable safeguards where risk is highest.

When AI systems interact with PHI, the stakes go beyond regulatory penalties or reputational damage. A single avoidable exposure can trigger cascading consequences across legal risk, partner trust, customer retention, and an organization’s ability to deploy AI at all.

Enforcement risk is real, and penalties can accumulate, particularly when organizations fail to conduct basic risk analysis or remediate known gaps. Many failures are not advanced or novel. They stem from foundational issues such as misconfigured portals, exposed electronic PHI, or missing controls.

A recurring problem is lack of visibility. Organizations cannot manage risk they cannot see, especially when data moves across systems and responsibilities are unclear.
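One way to make that visibility concrete is a data-flow inventory. The sketch below is a minimal illustration, assuming each flow can be recorded with a source, a destination, whether it carries PHI, and an accountable owner. The `DataFlow` type, the `unowned_phi_flows` function, and the sample systems are all hypothetical. The sketch surfaces the combination the paragraph warns about: PHI moving between systems with no clear owner.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DataFlow:
    """One hypothetical entry in a data-flow inventory."""
    source: str
    destination: str
    carries_phi: bool
    owner: Optional[str]  # None means no one is accountable for this flow


def unowned_phi_flows(flows: list[DataFlow]) -> list[DataFlow]:
    """Return flows that carry PHI but have no accountable owner."""
    return [f for f in flows if f.carries_phi and f.owner is None]


inventory = [
    DataFlow("EHR", "eligibility-check service", carries_phi=True, owner="revenue-cycle team"),
    DataFlow("EHR", "shared spreadsheet", carries_phi=True, owner=None),
    DataFlow("scheduling app", "analytics dashboard", carries_phi=False, owner=None),
]

for flow in unowned_phi_flows(inventory):
    print(f"{flow.source} -> {flow.destination}: PHI with no owner")
# prints "EHR -> shared spreadsheet: PHI with no owner"
```

Even a spreadsheet-level version of this inventory gives executives a list of specific flows to assign, rather than a general sense that data "moves around."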

This applies equally to business associates. If an organization supports providers, payers, or healthcare operations in a way that involves creating, receiving, maintaining, or transmitting PHI, HIPAA liability follows the data. It cannot be treated as someone else's problem.

A simple and effective way for executives to structure HIPAA risk discussions is to distinguish between data at rest and data in transit.

Data at rest

Data in transit

The highest risk often appears when PHI leaves its system of record. Examples include data copied into spreadsheets, pasted into external tools, or shared through workflows not designed to handle sensitive health information.
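A lightweight guard at the point of egress can catch PHI heading to destinations that were never designed for it. This is a minimal sketch, assuming each transfer is already tagged with whether it contains PHI (in practice, that tagging is the harder problem) and that the organization maintains a list of destinations approved for PHI. `APPROVED_PHI_DESTINATIONS`, `check_transfer`, and `PHITransferBlocked` are hypothetical names.

```python
# Hypothetical list of destinations reviewed and approved to receive PHI.
APPROVED_PHI_DESTINATIONS = frozenset({"ehr", "claims-clearinghouse"})


class PHITransferBlocked(Exception):
    """Raised when PHI would be sent somewhere not approved to receive it."""


def check_transfer(destination: str, contains_phi: bool) -> None:
    """Allow non-PHI transfers; allow PHI only to approved destinations."""
    if contains_phi and destination.lower() not in APPROVED_PHI_DESTINATIONS:
        raise PHITransferBlocked(f"PHI may not be sent to {destination!r}")


check_transfer("EHR", contains_phi=True)                # allowed: approved destination
check_transfer("external chatbot", contains_phi=False)  # allowed: no PHI
try:
    check_transfer("personal spreadsheet", contains_phi=True)
except PHITransferBlocked as err:
    print(err)  # PHI may not be sent to 'personal spreadsheet'
```

The design choice is default-deny for PHI: a new tool is blocked until someone deliberately approves it, which matches the point that risk concentrates where PHI leaves its system of record.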

Most of the safeguards below are familiar to security leaders. Their executive value lies in how they function together as an operating baseline for AI deployment, not as isolated compliance tasks.

The following controls represent a reasonable and defensible standard when AI workflows interact with PHI:

Core safeguards

These controls reduce downside risk while allowing teams to modernize workflows with confidence. They also create auditability, which becomes essential once AI systems begin touching sensitive operational data.
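Auditability can be illustrated with a tamper-evident access log. In this hypothetical sketch, each entry stores the SHA-256 hash of the previous entry, so altering a recorded entry breaks verification. The `AuditLog` class and its fields are assumptions for illustration, not a control described in the talk; a production system would also need durable, access-controlled storage.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    # Hash a canonical JSON encoding so the same entry always yields the same hash.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    """In-memory, hash-chained log of who did what to which resource."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, actor: str, action: str, resource: str) -> dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "resource": resource,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain. Editing any entry, or deleting one other than the last, fails."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("analyst@example.org", "read", "patient/123/demographics")
log.record("ai-pipeline", "export_deidentified", "dataset/claims-extract")
print(log.verify())  # True
log.entries[0]["actor"] = "someone-else"  # simulate tampering
print(log.verify())  # False
```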

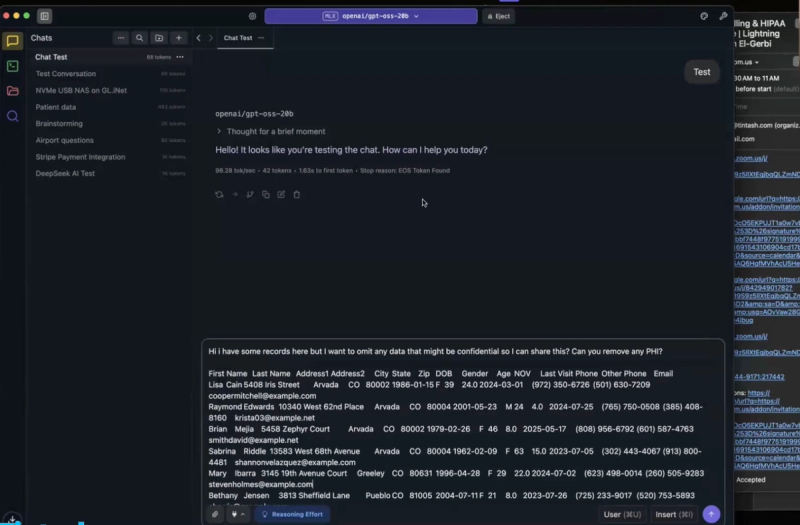

One of the most practical patterns discussed was a local-first approach to AI data handling. Instead of sending raw data directly to hosted AI tools, teams can process sensitive information locally first.

In this pattern, a local language model runs entirely within a controlled environment, such as a laptop, without sending data to the internet. A common use case is removing or masking PHI from datasets so that safer versions can be used for internal analysis, training data, or downstream workflows.
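The talk describes a local language model doing the masking. As a much simpler stand-in, the sketch below uses regular expressions to replace a few PHI-like patterns before text leaves the machine. This is a swapped-in technique for illustration only: the patterns and the `mask_phi` function are assumptions, and pattern matching alone will miss names, addresses, and identifiers embedded in free text, which is where a local model can add value.

```python
import re

# Illustrative patterns only (assumed formats); real PHI includes names, addresses,
# and free-text identifiers that simple regexes will miss.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
    "MRN": re.compile(r"\bMRN[:\s]*\d+\b", re.IGNORECASE),
}


def mask_phi(text: str) -> str:
    """Replace each matched pattern with a bracketed label, entirely on the local machine."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


record = "MRN: 884213, DOB 04/12/1986, call 555-867-5309 or jane.doe@example.com"
print(mask_phi(record))  # [MRN], DOB [DATE], call [PHONE] or [EMAIL]
```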

This is not an argument against hosted models. Hosted tools may still be better suited for complex tasks. The operational insight is that local tools can act as a preprocessing layer, reducing exposure before data ever leaves a controlled environment.

This pattern is governance friendly because it establishes a clear boundary. PHI remains inside controlled environments unless and until it has been intentionally transformed.
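The boundary described above can also be made explicit in code. In this hypothetical sketch, anything sent to a hosted model must be wrapped in a `Deidentified` type produced by a local transformation step, and raw strings are rejected at the egress point. `Deidentified`, `transform_locally`, and `send_to_hosted_model` are illustrative names, and the `masker` argument stands in for whatever local model or masking step an organization actually uses.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Deidentified:
    """Text that has been intentionally transformed inside the controlled environment."""
    text: str


def transform_locally(raw: str, masker: Callable[[str], str]) -> Deidentified:
    """Intended sole producer of Deidentified; `masker` stands in for the local model."""
    return Deidentified(masker(raw))


def send_to_hosted_model(payload: Deidentified) -> str:
    """Hypothetical egress point that refuses anything not explicitly Deidentified."""
    if not isinstance(payload, Deidentified):
        raise TypeError("raw data may not leave the controlled environment")
    # A real implementation would call the hosted model here; this just reports size.
    return f"sent {len(payload.text)} characters"


def toy_masker(s: str) -> str:
    # Toy masker for the example only; it knows exactly which values to hide.
    return s.replace("Jane Doe", "[NAME]").replace("884213", "[MRN]")


safe = transform_locally("Patient Jane Doe, MRN 884213", toy_masker)
print(safe.text)                   # Patient [NAME], MRN [MRN]
print(send_to_hosted_model(safe))  # sent 25 characters
try:
    send_to_hosted_model("Patient Jane Doe, MRN 884213")  # raw string: rejected
except TypeError as err:
    print(err)
```

Python cannot stop code from constructing `Deidentified` directly, so the type makes the boundary visible and reviewable rather than strictly enforced. The intended discipline is that only the local transformation step creates it.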

In healthcare organizations, compliance is often cited as the reason execution slows down. In practice, delays are frequently caused by unclear processes and coordination gaps rather than unavoidable HIPAA requirements.

A useful executive litmus test is turnaround time. A few working days for sensitive data requests can be reasonable in complex environments. When basic reports or access requests take weeks, the issue is usually operational friction, not regulatory constraint.
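The turnaround test is simple arithmetic on working days. This sketch counts weekdays between when a request was opened and when it was fulfilled, and flags anything beyond a threshold. The threshold of 5 working days is an assumption standing in for "a few working days," holidays are ignored, and the request names are hypothetical.

```python
from datetime import date, timedelta

# Assumption: "a few working days" is read as at most 5; adjust to local norms.
REASONABLE_WORKING_DAYS = 5


def working_days_between(start: date, end: date) -> int:
    """Count Monday-Friday dates after `start` up to and including `end` (holidays ignored)."""
    days = 0
    current = start
    while current < end:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            days += 1
    return days


def flag_slow_requests(requests: dict[str, tuple[date, date]]) -> list[str]:
    """Return the names of requests whose turnaround exceeds the threshold."""
    return [
        name
        for name, (opened, fulfilled) in requests.items()
        if working_days_between(opened, fulfilled) > REASONABLE_WORKING_DAYS
    ]


requests = {
    "eligibility report": (date(2024, 3, 4), date(2024, 3, 7)),    # 3 working days
    "claims data extract": (date(2024, 3, 4), date(2024, 3, 22)),  # 14 working days
}
print(flag_slow_requests(requests))  # ['claims data extract']
```

A flagged request is a prompt to look for operational friction, such as unclear ownership or handoffs, before attributing the delay to HIPAA.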

When compliance functions as an enabler rather than a blocker, organizations move faster without increasing risk.

Adam brings 15+ years of technology leadership across healthcare, real estate, entertainment, and other sectors to MedAlly. He has architected solutions ranging from healthcare SaaS platforms and ML systems to multimillion-dollar infrastructure transformations for organizations of all sizes. After gaining insight into the concierge medicine industry, Adam recognized an opportunity to bring greater efficiency and value to that market. He founded MedAlly to support concierge medicine physicians through strategic guidance, technical excellence, and partnership-focused support.

AI Data Handling & HIPAA in Healthcare